Nvidia Opens AI Ecosystem to Competitors in Worldwide Expansion

(The News Pulse) -- Nvidia Corp. Chief Executive Officer Jensen Huang outlined plans to let customers deploy competitors' processors in data centers built around Nvidia's technology. The move acknowledges the growth of in-house chip development among key clients such as Microsoft Corp. and Amazon.com Inc.

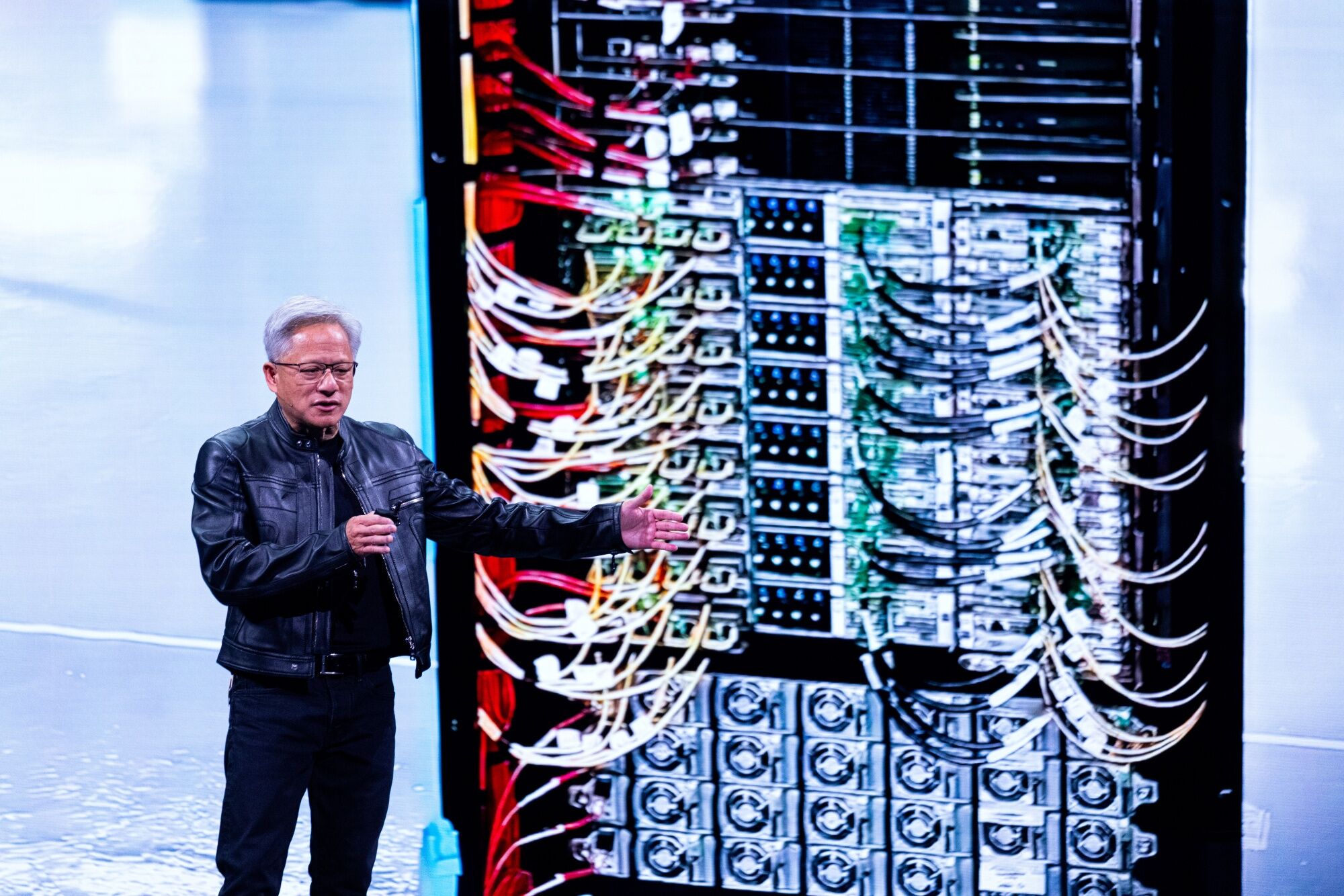

On Monday, Huang opened Computex in Taiwan, Asia's premier electronics exhibition. He spent most of his roughly two-hour speech honoring the work of local supply chain partners. His principal announcement, however, was a new initiative called NVLink Fusion, a system that enables more customized artificial intelligence infrastructure by combining Nvidia’s high-speed links with components from other suppliers for the first time.

Until now, Nvidia has offered such complete computer systems only with its own components. The new approach gives data centers more flexibility and allows a measure of competition, while keeping Nvidia's technology at the center. NVLink Fusion products will let customers use their own central processors with Nvidia’s artificial intelligence chips, or pair Nvidia silicon with another company’s AI accelerators.

Nvidia, based in Santa Clara, California, is eager to strengthen its position at the heart of the AI boom at a time when investors and some executives remain uncertain whether spending on data centers is sustainable. The technology sector is also grappling with significant questions about how the Trump administration's tariff policies will disrupt worldwide demand and production.

"It provides an opening for large-scale cloud providers to develop their own customized silicon incorporating NVLink technology. Their decision to proceed will hinge on whether these companies believe Nvidia will remain dominant and central to the industry," explained Ian Cutress, lead analyst at research firm More Than Moore. "However, I anticipate some may avoid this option to minimize their dependency on the Nvidia ecosystem."

In addition to the data center launch, Huang discussed various product improvements on Monday, ranging from faster software to chip configurations designed to speed up AI services. That contrasts with the 2024 edition, when the Nvidia CEO unveiled the next-generation Rubin and Blackwell platforms, energizing a technology sector that was then looking for ways to capitalize on the AI surge that followed ChatGPT. Nvidia shares fell more than 3% in pre-market trading, reflecting a wider sell-off in the tech industry.

On Monday, shares of the company’s two key Asian partners, Taiwan Semiconductor Manufacturing Co. and Hon Hai Precision Industry Co., fell more than 1%, in line with a broader market decline.

Huang opened Computex with an update on the timing of Nvidia’s next-generation GB300 systems, which are due in the third quarter of this year. They will be an upgrade over the current flagship Grace Blackwell systems, which are now being rolled out to cloud service providers.

What News Pulse Intelligence Says

The ramp-up of GB300 servers in the second half will be crucial. We also expect overall demand for AI servers to come under closer scrutiny amid ongoing economic and geopolitical uncertainties.

- Steven Tseng, analyst

At Computex, Huang also unveiled a new RTX Pro Server system, which he said delivers four times the performance of Nvidia’s former flagship H100 AI system on DeepSeek workloads. The RTX Pro Server also shows a 1.7-fold improvement on certain workloads involving Meta Platforms Inc.'s Llama models. The new product is now in volume production, Huang said.

On Monday, he thanked the many suppliers, from TSMC to Foxconn, that help manufacture and distribute Nvidia’s technology around the world. Huang said Nvidia will work with them and the Taiwanese government to build an AI supercomputer for the island. The company also plans to build a substantial new office complex in Taipei.

"When new markets need to be established, they must begin right here, at the heart of the computer ecosystem," Huang, 62, said of his native Taiwan.

Although Nvidia remains the clear leader in the most advanced AI chips, rivals and partners alike are racing to develop their own comparable semiconductors, whether to gain market share or to widen the range of suppliers for these expensive, high-margin components. Major customers such as Microsoft and Amazon are also designing their own custom parts, which risks making Nvidia less essential to data centers.

Several partners have already signed up for Nvidia’s effort to open its AI server ecosystem. MediaTek Inc., Marvell Technology Inc. and Alchip Technologies Ltd. will develop custom AI chips that work with Nvidia’s processors, Huang said. Qualcomm Inc. and Fujitsu Ltd. plan to make custom processors that work alongside Nvidia’s accelerators in computer systems.

Nvidia's smaller-scale computers, the DGX Spark and DGX Station announced earlier this year, will be offered through a wider range of suppliers. Starting this summer, local partners such as Acer Inc. and Gigabyte Technology Co. will join Dell Technologies Inc. and HP Inc. in offering the portable and desktop devices, according to Nvidia.

The company also discussed new software for robots that is designed to speed up their training in simulated scenarios. Huang emphasized the potential and rapid growth of humanoid robots, which he sees as possibly the most exciting avenue for what is known as embodied AI.

Nvidia said it will offer detailed blueprints to speed up the construction of "AI factories" for businesses. The service is aimed at companies that lack in-house expertise in the complex steps involved in building their own AI data centers.

The company also introduced new software called DGX Cloud Lepton. It is intended to help cloud computing providers such as CoreWeave Inc. and SoftBank Group Corp. streamline the process of connecting AI developers with the computing resources they need to build and run their applications.

--Assisted by Debby Wu and Nick Turner.

©2025 The News Pulse L.P.
